Winutils.exe Hadoop Download VM

Now, let's play with some HBase commands. We'll start with a basic scan that returns all columns in the cars table. Using a long column family name, such as columnfamily1, is a horrible idea in production. How to install Apache Spark on Windows 10: to do that, go to the JDK download page, download the latest version of the JDK, and install it. Hadoop WinUtils: since we are using pre-built Spark binaries for Hadoop, we also need additional binary files to run it. Apache Spark installation on Windows 10: for any application that uses the Java Virtual Machine, it is always recommended to install the appropriate Java version. In this case I just updated my Java version. Download winutils.exe. This was the critical point for me, because I downloaded one version and it did not work until I…

If you were confused by Spark's quick-start guide, this article contains resolutions to the more common errors encountered by developers.


This article is for the Java developer who wants to learn Apache Spark but doesn't know much about Linux, Python, Scala, R, and Hadoop. Around 50% of developers use a Microsoft Windows environment for development, and they don't need to change their development environment to learn Spark. This is the first article of a series, 'Apache Spark on Windows', which gives a step-by-step guide to starting an Apache Spark application in a Windows environment, along with the challenges faced and their resolutions.

A Spark Application

A Spark application can be a Windows-shell script, or it can be a custom program written in Java, Scala, Python, or R. You need the Spark executables for Windows installed on your system to run these applications. Scala statements can be entered directly in the 'spark-shell' CLI; however, bundled programs need the 'spark-submit' CLI. These CLIs come with the Spark executables.

Download and Install Spark

Download Spark from https://spark.apache.org/downloads.html and choose 'Pre-built for Apache Hadoop 2.7 and later'.

Unpack spark-2.3.0-bin-hadoop2.7.tgz into a directory.
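If you would rather script the unpacking, here is a minimal Python sketch that uses only the standard-library tarfile module. The helper name unpack_spark and the destination folder are illustrative assumptions, not part of the Spark distribution. It extracts the archive and returns the single top-level folder (for example spark-2.3.0-bin-hadoop2.7).

```python
import tarfile
from pathlib import Path


def unpack_spark(archive: str, dest_dir: str) -> Path:
    """Extract a Spark .tgz into dest_dir and return its top-level folder.

    unpack_spark is a hypothetical helper, not part of Spark.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        # Every member should live under one folder, e.g. spark-2.3.0-bin-hadoop2.7/
        top_level = {Path(name).parts[0] for name in tar.getnames()}
        if len(top_level) != 1:
            raise ValueError(f"expected one top-level folder, got {sorted(top_level)}")
        if hasattr(tarfile, "data_filter"):
            # Python versions with extraction filters: refuse unsafe member paths
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)
    return dest / top_level.pop()
```

For example, unpack_spark("spark-2.3.0-bin-hadoop2.7.tgz", r"C:\Installations") should return C:\Installations\spark-2.3.0-bin-hadoop2.7, assuming the archive sits in the current directory.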

Clearing the Startup Hurdles

You may follow Spark's quick start guide to start your first program. However, it is not that straightforward, and you will face various issues, listed below along with their resolutions.

Please note that the user must have administrative permissions, or you need to run the command prompt as administrator.

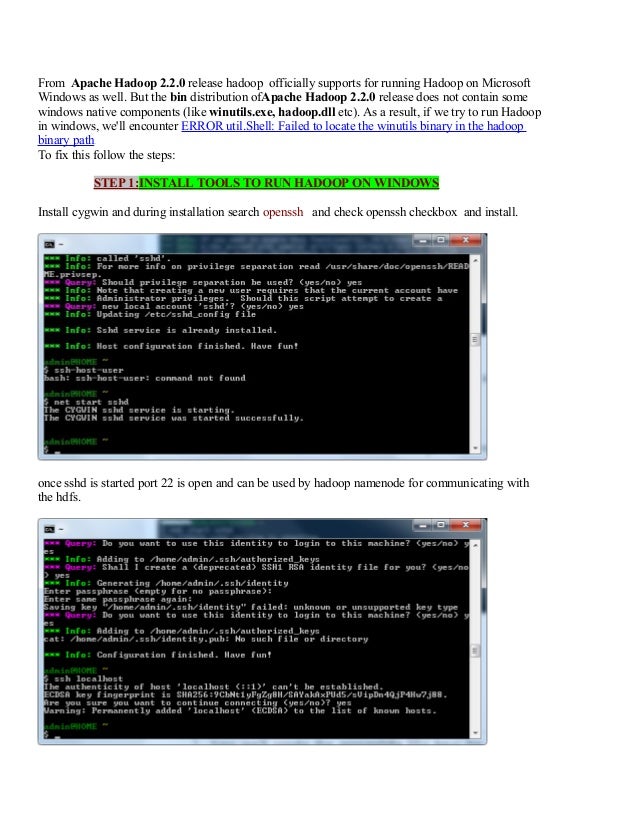

Issue 1: Failed to Locate winutils Binary

Even if you don't use Hadoop, Spark on Windows needs Hadoop's winutils binary to initialize the 'hive' context. If Hadoop is not installed, you get an error saying that Spark failed to locate the winutils binary.

This can be fixed by adding a dummy Hadoop installation. Spark expects winutils.exe in the Hadoop installation '<Hadoop Installation Directory>/bin/winutils.exe' (note the 'bin' folder).

Download Hadoop 2.7's winutils.exe and place it in the directory C:\Installations\Hadoop\bin.

Now set the environment variable HADOOP_HOME=C:\Installations\Hadoop.
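To double-check the layout before starting the shell, here is a small Python sketch (the helper name winutils_path is hypothetical) that resolves HADOOP_HOME\bin\winutils.exe and fails loudly if the variable is unset or the file is not in the 'bin' folder. It only checks that the file exists; it cannot tell whether the winutils build matches your Hadoop version.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def winutils_path(env: Optional[Mapping[str, str]] = None) -> Path:
    """Return HADOOP_HOME/bin/winutils.exe or raise if it cannot be found."""
    env = os.environ if env is None else env
    hadoop_home = env.get("HADOOP_HOME")
    if not hadoop_home:
        raise RuntimeError("HADOOP_HOME is not set")
    # The binary is expected in the 'bin' subfolder, not in HADOOP_HOME itself.
    exe = Path(hadoop_home) / "bin" / "winutils.exe"
    if not exe.is_file():
        raise FileNotFoundError(f"winutils.exe not found at {exe}")
    return exe
```

Calling winutils_path() with no arguments checks the current process environment, so run it from a new console after setting the variable.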

Now start the Spark shell; you may get a few warnings, which you may ignore for now.

Issue 2: File Permission Issue for /tmp/hive

Let's run the first program as suggested by Spark's quick start guide. Don't worry about the Scala syntax for now.

You may ignore the plugin warnings for now, but '/tmp/hive on HDFS should be writable' should be fixed.

This can be fixed by changing the permissions on the '/tmp/hive' directory (which is C:\tmp\hive) with the chmod command of winutils.exe. You can run basic Linux commands on Windows using winutils.exe.
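A commonly used form of this fix is 'winutils.exe chmod -R 777 C:\tmp\hive'. The Python sketch below (hypothetical helper names) builds that call and runs it with subprocess; treat the mode 777 and the -R flag as the usual choice rather than a confirmed reproduction of the article's exact command.

```python
import subprocess
from pathlib import Path
from typing import List


def hive_chmod_command(winutils: str, hive_dir: str = r"C:\tmp\hive") -> List[str]:
    """Argument list for: winutils.exe chmod -R 777 <hive_dir>."""
    return [winutils, "chmod", "-R", "777", hive_dir]


def fix_hive_permissions(winutils: str, hive_dir: str = r"C:\tmp\hive") -> None:
    """Create the Hive scratch directory if needed, then make it world-writable."""
    Path(hive_dir).mkdir(parents=True, exist_ok=True)
    # check=True raises CalledProcessError if winutils reports a failure
    subprocess.run(hive_chmod_command(winutils, hive_dir), check=True)
```

With the layout from Issue 1, that would be fix_hive_permissions(r"C:\Installations\Hadoop\bin\winutils.exe"); afterwards 'winutils.exe ls C:\tmp\hive' should show the new permissions.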

Issue 3: Failed to Start Database 'metastore_db'

If you run the same command, val textFile = spark.read.textFile("README.md"), again, you may get an exception saying that Spark failed to start database 'metastore_db'.

This can be fixed just by removing the 'metastore_db' directory from the Spark installation directory 'C:/Installations/spark-2.3.0-bin-hadoop2.7' and running the command again.
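A minimal Python sketch of that cleanup follows; the helper name remove_metastore is hypothetical. It assumes no spark-shell is still running, since an open shell likely still holds the embedded (Derby-based) metastore, which is a common cause of this error. The folder is created in the directory spark-shell was launched from, which here is the installation directory.

```python
import shutil
from pathlib import Path


def remove_metastore(spark_dir: str) -> bool:
    """Delete the local metastore_db folder left behind by a previous spark-shell.

    Returns True if a metastore_db directory was found and removed.
    """
    metastore = Path(spark_dir) / "metastore_db"
    if not metastore.is_dir():
        return False
    shutil.rmtree(metastore)  # removes the folder and everything inside it
    return True
```

For example, remove_metastore(r"C:\Installations\spark-2.3.0-bin-hadoop2.7") returns False when there is nothing to clean up.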

Run Spark Application on spark-shell

Run your first program as suggested by Spark's quick start guide.

Dataset: 'org.apache.spark.sql.Dataset' is the primary abstraction of Spark. A Dataset maintains a distributed collection of items. Next, we will create a Dataset from a file and perform operations on it.

SparkSession: 'org.apache.spark.sql.SparkSession' is the entry point to Spark programming.

Start the spark-shell.

The Spark shell initializes a Spark context named 'sc' and a Spark session named 'spark'. From the session we can get a DataFrameReader, which can read a text file as a Dataset in which each line is one item. The quick start's Scala commands create a Dataset named 'textFile' and then run operations on it such as count(), first(), and filter().
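The Scala commands themselves are not reproduced here. To make the three operations concrete, here is a plain-Python analogue of what they compute on a local copy of README.md; no Spark is involved, so nothing is distributed. The name quick_start_stats is hypothetical, and filtering on the word "Spark" is an assumed example.

```python
from pathlib import Path
from typing import List, Tuple


def quick_start_stats(path: str, word: str = "Spark") -> Tuple[int, str, List[str]]:
    """Single-machine analogue of textFile.count(), first() and filter()."""
    # spark.read.textFile yields one item per line; here we just split the file.
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    count = len(lines)                              # ~ textFile.count()
    first = lines[0]                                # ~ textFile.first(); fails on an empty file
    matching = [ln for ln in lines if word in ln]   # ~ textFile.filter(line => line.contains(word))
    return count, first, matching
```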

Some more operations: map(), reduce(), and collect().
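As a rough local illustration of how these three fit together, the sketch below maps each line to its word count, collects the counts into a list, and reduces them to the largest one, in the spirit of the quick start's "line with the most words" example. It is plain Python, not Spark; the name words_per_line is hypothetical, and str.split() splits on any whitespace, which can differ slightly from Scala's split(" ").

```python
from functools import reduce
from typing import List, Tuple


def words_per_line(lines: List[str]) -> Tuple[List[int], int]:
    """Plain-Python analogue of map() + collect() and map() + reduce()."""
    # roughly textFile.map(line => line.split(" ").size).collect()
    counts = list(map(lambda line: len(line.split()), lines))
    # roughly .reduce((a, b) => if (a > b) a else b): the largest word count
    longest = reduce(lambda a, b: a if a > b else b, counts)
    return counts, longest
```

Like Spark's reduce(), this raises an error on empty input.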

Run Spark Application on spark-submit

In the last example, we ran the Spark application as a Scala script in 'spark-shell'; now we will run a Spark application built in Java. Unlike with spark-shell, we need to create a SparkSession first, and at the end the SparkSession must be stopped programmatically.

SparkApp.java reads a text file and then counts the number of lines.

Create this Java file in a Maven project with the Spark dependencies declared in the pom.

Build the Maven project; it will generate the jar artifact 'target/spark-test-0.0.1-SNAPSHOT.jar'.

Now submit this Spark application with spark-submit (some logs are excluded for clarity).
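The submission can also be scripted. This Python sketch (hypothetical helper names) assembles a spark-submit call for the jar built above. Passing "SparkApp" as the main class is an assumption: if SparkApp.java declares a package, use the fully qualified name. --class and --master are standard spark-submit options, and local[*] runs locally with one worker thread per logical core.

```python
import subprocess
from pathlib import Path
from typing import List


def spark_submit_command(spark_home: str, main_class: str, jar: str,
                         master: str = "local[*]") -> List[str]:
    """Argument list for running a locally built jar with spark-submit."""
    # On Windows the launcher is the .cmd script in %SPARK_HOME%\bin
    launcher = Path(spark_home) / "bin" / "spark-submit.cmd"
    return [str(launcher), "--class", main_class, "--master", master, jar]


def submit(spark_home: str, main_class: str, jar: str) -> int:
    """Run spark-submit and return its exit code."""
    return subprocess.run(spark_submit_command(spark_home, main_class, jar)).returncode
```

From the Maven project root, that might look like submit(r"C:\Installations\spark-2.3.0-bin-hadoop2.7", "SparkApp", r"target\spark-test-0.0.1-SNAPSHOT.jar").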

Congratulations! You are done with your first Spark application in a Windows environment.

In the next article, we will talk about Spark's distributed caching and how it works with real-world examples in Java. Happy Learning!


Opinions expressed by DZone contributors are their own.

I am trying to set up Apache Spark on Windows.

After searching a bit, I understand that the standalone mode is what I want. Which binaries do I download in order to run Apache Spark on Windows? I see distributions with Hadoop and CDH on the Spark download page.

I couldn't find any references to this on the web. A step-by-step guide would be highly appreciated.

Mukesh Ram

10 Answers

I found the easiest solution on Windows is to build from source.

You can pretty much follow this guide: http://spark.apache.org/docs/latest/building-spark.html

Download and install Maven, and set MAVEN_OPTS to the value specified in the guide.

But if you're just playing around with Spark, and don't actually need it to run on Windows for any reason other than that your own machine runs Windows, I'd strongly suggest you install Spark on a Linux virtual machine. The simplest way to get started is probably to download the ready-made images made by Cloudera or Hortonworks, and either use the bundled version of Spark or install your own from source or from the compiled binaries you can get from the Spark website.

Steps to install Spark in local mode:

- Install Java 7 or later. To test that the Java installation is complete, open a command prompt, type java, and hit Enter. If you receive the message 'Java' is not recognized as an internal or external command, you need to configure your environment variables, JAVA_HOME and PATH, to point to the path of the JDK.
- Download and install Scala. Set SCALA_HOME in Control Panel\System and Security\System, go to 'Advanced system settings', and add %SCALA_HOME%\bin to the PATH variable in environment variables.
- Install Python 2.6 or later from the Python download link.
- Download SBT. Install it and set SBT_HOME as an environment variable with the value <<SBT PATH>>.
- Download winutils.exe from the Hortonworks repo or git repo. Since we don't have a local Hadoop installation on Windows, we have to download winutils.exe and place it in a bin directory under a newly created Hadoop home directory. Set HADOOP_HOME = <<Hadoop home directory>> in the environment variables.
- We will be using a pre-built Spark package, so choose a Spark package pre-built for Hadoop on the Spark download page. Download and extract it.
- Set SPARK_HOME and add %SPARK_HOME%\bin to the PATH variable in environment variables.
- Run the command spark-shell.
- Open http://localhost:4040/ in a browser to see the SparkContext web UI.
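Before running spark-shell, a quick sanity check of these variables can save a round of trial and error. The Python sketch below (hypothetical names check_spark_env, REQUIRED, ON_PATH) reports which of the variables from the steps above are unset and which homes do not have their bin folder on PATH; which variables need a bin entry on PATH is taken from the steps, so adjust the tuples if your setup differs.

```python
import os
from typing import Dict, List, Mapping, Sequence

# Variables named in the steps above, and those whose <HOME>\bin should be on PATH.
REQUIRED = ("JAVA_HOME", "SCALA_HOME", "SBT_HOME", "HADOOP_HOME", "SPARK_HOME")
ON_PATH = ("JAVA_HOME", "SCALA_HOME", "SPARK_HOME")


def _norm(path: str) -> str:
    # Normalise separators and, on Windows, letter case before comparing paths
    return os.path.normcase(os.path.normpath(path))


def check_spark_env(env: Mapping[str, str],
                    required: Sequence[str] = REQUIRED,
                    on_path: Sequence[str] = ON_PATH,
                    sep: str = os.pathsep) -> Dict[str, List[str]]:
    """Report unset *_HOME variables and homes whose bin folder is not on PATH."""
    entries = {_norm(p) for p in env.get("PATH", "").split(sep) if p}
    unset = [name for name in required if not env.get(name)]
    not_on_path = [name for name in on_path
                   if env.get(name) and _norm(os.path.join(env[name], "bin")) not in entries]
    return {"unset": unset, "not_on_path": not_on_path}
```

Running print(check_spark_env(os.environ)) in a fresh command prompt shows the current state; two empty lists mean every variable is set and on PATH.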

You can download Spark from here:

I recommend this version: Hadoop 2 (HDP2, CDH5)

Since version 1.0.0, there are .cmd scripts to run Spark on Windows.

Unpack it using 7-Zip or similar.

To start, you can execute bin\spark-shell.cmd --master local[2]

To configure your instance, you can follow this link: http://spark.apache.org/docs/latest/

You can use following ways to setup Spark:

- Building from Source

- Using prebuilt release

There are various ways to build Spark from source.

First I tried building Spark from source with SBT, but that requires Hadoop. To avoid those issues, I used a pre-built release.

Instead of the source, I downloaded the pre-built release for Hadoop 2.x and ran it. For this you need to install Scala as a prerequisite.

I have collated all steps here :

How to run Apache Spark on Windows7 in standalone mode

Hope it'll help you..!!!

Nishu Tayal

Trying to work with spark-2.x.x, building the Spark source code didn't work for me.

So, although I'm not going to use Hadoop, I downloaded the pre-built Spark with Hadoop embedded:

spark-2.0.0-bin-hadoop2.7.tar.gz

Point SPARK_HOME at the extracted directory, then add ;%SPARK_HOME%\bin; to PATH.

Download the winutils executable from the Hortonworks repository, or from the Amazon AWS platform winutils.

Create a directory where you place the executable winutils.exe, for example C:\SparkDev\x64. Add the environment variable %HADOOP_HOME%, which points to this directory, then add %HADOOP_HOME%\bin to PATH.

Using the command line, create the directory:

Using the executable that you downloaded, grant full permissions on the directory you created, using Unix-style notation:

Type the following command line:

Scala command line input should be shown automatically.

Remark: You don't need to configure Scala separately. It's built in too.

Farah

Here are the fixes to get it to run on Windows without rebuilding everything, for example if you do not have a recent version of MS-VS. (You will need a Win32 C++ compiler, but you can install MS VS Community Edition for free.)

I've tried this with Spark 1.2.2 and Mahout 0.10.2, as well as with the latest versions in November 2015. There are a number of problems, including the fact that the Scala code tries to run a bash script (mahout/bin/mahout), which of course does not work; the sbin scripts have not been ported to Windows; and the winutils are missing if Hadoop is not installed.

(1) Install Scala, then unzip Spark, Hadoop, and Mahout into the root of C: under their respective product names.

(2) Rename mahout\bin\mahout to mahout.sh.was (we will not need it).

(3) Compile the following Win32 C++ program and copy the executable to a file named C:\mahout\bin\mahout (that's right: no .exe suffix, like a Linux executable).

(4) Create the script mahout\bin\mahout.bat and paste in the content below, although the exact names of the jars in the _CP classpaths will depend on the versions of Spark and Mahout. Update any paths per your installation. Use 8.3 path names without spaces in them. Note that you cannot use wildcards/asterisks in the classpaths here.

The name of the variable MAHOUT_CP should not be changed, as it is referenced in the C++ code.

Of course, you can comment out the code that launches the Spark master and worker, because Mahout will run Spark as needed; I just put it in the batch job to show you how to launch it if you wanted to use Spark without Mahout.

(5) The following tutorial is a good place to begin:

You can bring up the Mahout Spark instance at:

The guide by Ani Menon (thanks!) almost worked for me on Windows 10; I just had to get a newer winutils.exe from that Git repository (currently hadoop-2.8.1): https://github.com/steveloughran/winutils

Here are seven steps to install Spark on Windows 10 and run it from Python:

Step 1: Download the Spark 2.2.0 tar.gz file (a gzip-compressed tape archive) to any folder F from this link: https://spark.apache.org/downloads.html. Extract it and copy the extracted folder to the desired folder A. Rename the spark-2.2.0-bin-hadoop2.7 folder to spark.

Let the path to the spark folder be C:\Users\Desktop\A\spark.

Step 2: Download the Hadoop 2.7.3 tar.gz file to the same folder F from this link: https://www.apache.org/dyn/closer.cgi/hadoop/common/hadoop-2.7.3/hadoop-2.7.3.tar.gz. Extract it and copy the extracted folder to the same folder A. Rename the folder from Hadoop-2.7.3.tar to hadoop. Let the path to the hadoop folder be C:\Users\Desktop\A\hadoop.

Step 3: Create a new Notepad text file. Save this empty file as winutils.exe (with Save as type: All files). Copy this 0 KB winutils.exe file to the bin folder in spark: C:\Users\Desktop\A\spark\bin.

Step 4: Now, we have to add these folders to the System environment.

4a: Create a system variable (not a user variable, as a user variable will inherit all the properties of the system variable). Variable name: SPARK_HOME. Variable value: C:\Users\Desktop\A\spark

Find the Path system variable and click Edit. You will see multiple paths. Do not delete any of them. Add this variable value: ;C:\Users\Desktop\A\spark\bin


4b: Create a system variable.

Variable name: HADOOP_HOME. Variable value: C:\Users\Desktop\A\hadoop

Find the Path system variable and click Edit. Add this variable value: ;C:\Users\Desktop\A\hadoop\bin

4c: Create a system variable. Variable name: JAVA_HOME. Search for Java in Windows, right-click it, and click Open file location. You will have to right-click again on any one of the Java files and click Open file location. You will be using the path of this folder. Alternatively, you can search for C:\Program Files\Java. My installed Java version is jre1.8.0_131. Variable value: C:\Program Files\Java\jre1.8.0_131\bin

Find the Path system variable and click Edit. Add this variable value: ;C:\Program Files\Java\jre1.8.0_131\bin

Step 5: Open a command prompt and go to your Spark bin folder (type cd C:\Users\Desktop\A\spark\bin). Type spark-shell.

It may take some time and give some warnings. Finally, it will show the welcome banner for Spark version 2.2.0.

Step 6: Type exit() or restart the command prompt, and go to the Spark bin folder again. Type pyspark:

It will show some warnings and errors, but ignore them. It works.

Step 7: Your download is complete. If you want to run Spark directly from the Python shell, go to Scripts in your Python folder and type

in the command prompt.

In the Python shell,

import the necessary modules.

If you would like to skip the steps for importing findspark and initializing it, then please follow the procedure given in importing pyspark in python shell

Here is a simple, minimal script to run from any Python console. It assumes that you have extracted the Spark libraries you downloaded into C:\Apache\spark-1.6.1.

This works in Windows without building anything and solves problems where Spark would complain about recursive pickling.
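The script itself is not reproduced here. One common way to make 'import pyspark' work from a plain Python console, roughly what findspark automates, is to put SPARK_HOME\python and the bundled Py4J zip on sys.path. The sketch below (hypothetical helper names) does that; the glob pattern py4j-*-src.zip is used because the bundled Py4J version differs between Spark releases.

```python
import glob
import os
import sys
from typing import List


def pyspark_paths(spark_home: str) -> List[str]:
    """Folders/zips that make 'import pyspark' resolvable from SPARK_HOME."""
    python_dir = os.path.join(spark_home, "python")
    # The bundled Py4J version differs between Spark releases, so glob for it.
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    if not py4j_zips:
        raise FileNotFoundError(f"no py4j-*-src.zip under {python_dir}")
    return [python_dir, py4j_zips[-1]]


def add_pyspark_to_path(spark_home: str) -> None:
    """Prepend the PySpark entries to sys.path and set SPARK_HOME if unset."""
    os.environ.setdefault("SPARK_HOME", spark_home)
    for entry in reversed(pyspark_paths(spark_home)):
        if entry not in sys.path:
            sys.path.insert(0, entry)
```

After add_pyspark_to_path(r"C:\Apache\spark-1.6.1"), 'import pyspark' should resolve against that installation; a Java runtime is still needed to actually start a SparkContext.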

HansHarhoff

Cloudera and Hortonworks are the best tools to get started with HDFS on Microsoft Windows. You can also use VMware or VirtualBox to start a virtual machine for building your HDFS and Spark, Hive, HBase, Pig, and Hadoop setup with Scala, R, Java, and Python.

protected by Community♦ Jan 30 '17 at 22:30
